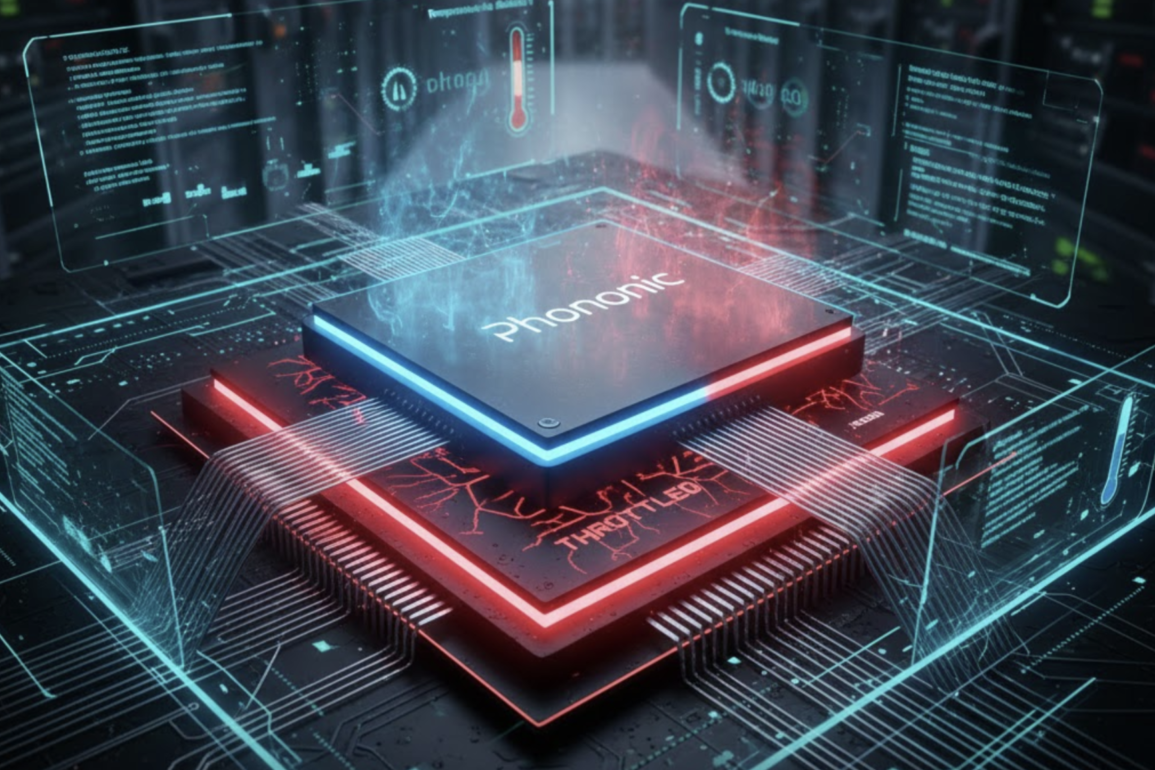

The soaring power demands of Artificial Intelligence (AI) are colliding with a fundamental challenge in data centers: heat. As AI workloads become more complex and variable, the resulting temperature spikes force high-performance chips, like those in xPUs and HBMs, to throttle their performance by as much as 30% to avoid burning out. To manage this heat, operators currently overprovision cooling by up to 78%, creating a major drag on efficiency and cost.

Addressing this critical bottleneck, Phononic, a leader in solid state cooling technology, has introduced the “Thermal Kit,” a precise, performance-enhancing cooling solution designed specifically for AI data centers.

The technology integrates high-performance thermoelectric coolers (TECs), devices that use the Peltier effect to create a cold side and a hot side, into the node architecture. The Thermal Kit acts as a surgical complement to existing bulk liquid cooling systems.

Rather than relying solely on slow-to-react mechanical chillers, Phononic’s solution provides active, chip-level hotspot thermal control that adjusts in milliseconds. This rapid response is crucial for managing the unpredictable thermal load changes generated by dynamic AI processes, such as large language model (LLM) inference and generative model training.

“The Thermal Kit is designed to meet one of the biggest challenges of today’s AI data centers: cooling,” Matt Langman, Phononic SVP and GM of Infrastructure Solutions, told Afcacia. “For operators facing unprecedented power demands for today’s AI workloads, it is mission critical to maintain performance through reductions in thermal throttling and optimized energy use of existing liquid-cooled infrastructure. With this breakthrough, customers can unlock higher compute capability and deliver meaningful data center wide ROI.”

The system is controlled by API-accessible firmware and software that intelligently monitors workload demands and proactively manages the heat. By providing precise cooling exactly where and when it’s needed, the Thermal Kit delivers attractive returns on infrastructure investment.
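To make the idea of proactive, workload-aware control concrete, here is a minimal Python sketch of one way such a loop could be structured: a proportional term reacts to the measured hotspot temperature, and a feedforward term raises the TEC drive as soon as a heavier workload is predicted, before the temperature climbs. This is not Phononic's firmware or API. The class name, setpoint, gains, and the 0.0 to 1.0 drive scale are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class HotspotController:
    """Hypothetical proportional + feedforward controller for a TEC drive level.

    All defaults are illustrative assumptions, not vendor values.
    """

    target_c: float = 85.0  # assumed hotspot setpoint, degrees C
    kp: float = 0.05        # assumed proportional gain (drive per degree C over target)
    kff: float = 0.001      # assumed feedforward gain (drive per predicted watt)

    def drive(self, temp_c: float, predicted_power_w: float) -> float:
        """Return a TEC drive level clamped to the range 0.0 to 1.0."""
        error_c = temp_c - self.target_c
        # Reactive part: respond to how far the hotspot is above the setpoint.
        reactive = self.kp * error_c
        # Proactive part: respond to the predicted workload power right away.
        proactive = self.kff * predicted_power_w
        return max(0.0, min(1.0, reactive + proactive))


controller = HotspotController()
idle_drive = controller.drive(temp_c=85.0, predicted_power_w=0.0)
burst_drive = controller.drive(temp_c=85.0, predicted_power_w=400.0)
```

In this sketch, `burst_drive` is higher than `idle_drive` even though the measured temperature is the same in both calls, which is the sense in which the control is proactive rather than purely reactive.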

When GPUs overheat, performance drops rapidly as thermal throttling kicks in and firmware lowers clock speeds to protect the silicon. Efficient, targeted cooling from the kit keeps temperatures in check, significantly reducing throttling and improving runtimes for intensive AI tasks. The effect is a performance boost without a redesign of the underlying chip.

This targeted approach enhances asset utilization by reducing performance drops, enabling hyperscalers to extract more capacity from their existing infrastructure. Furthermore, consistent temperature control reduces stress on critical components, increasing their useful life and cutting down on failures in high-value AI hardware.

Finally, with hotspots handled at the chip level, operators can run the secondary cooling loop several degrees warmer. That reduces chiller load and energy use, improving energy efficiency and freeing up power capacity for reinvestment into additional infrastructure.

Phononic’s technology, which is already trusted by Tier 1 hyperscalers for optical transceiver cooling, aims to become the standard for optimizing the performance and efficiency of the next generation of highly dense, liquid-cooled AI data centers.

The long-term bet, both by Phononic and its data center partners, is that by providing surgical, solid-state cooling at the chip level, they can eliminate the thermal bottleneck that has silently limited the true potential of the AI revolution.